BirdEye - an Automatic Method for Inverse Perspective Transformation of Road Image without Calibration

09 Jul 2015Abstract

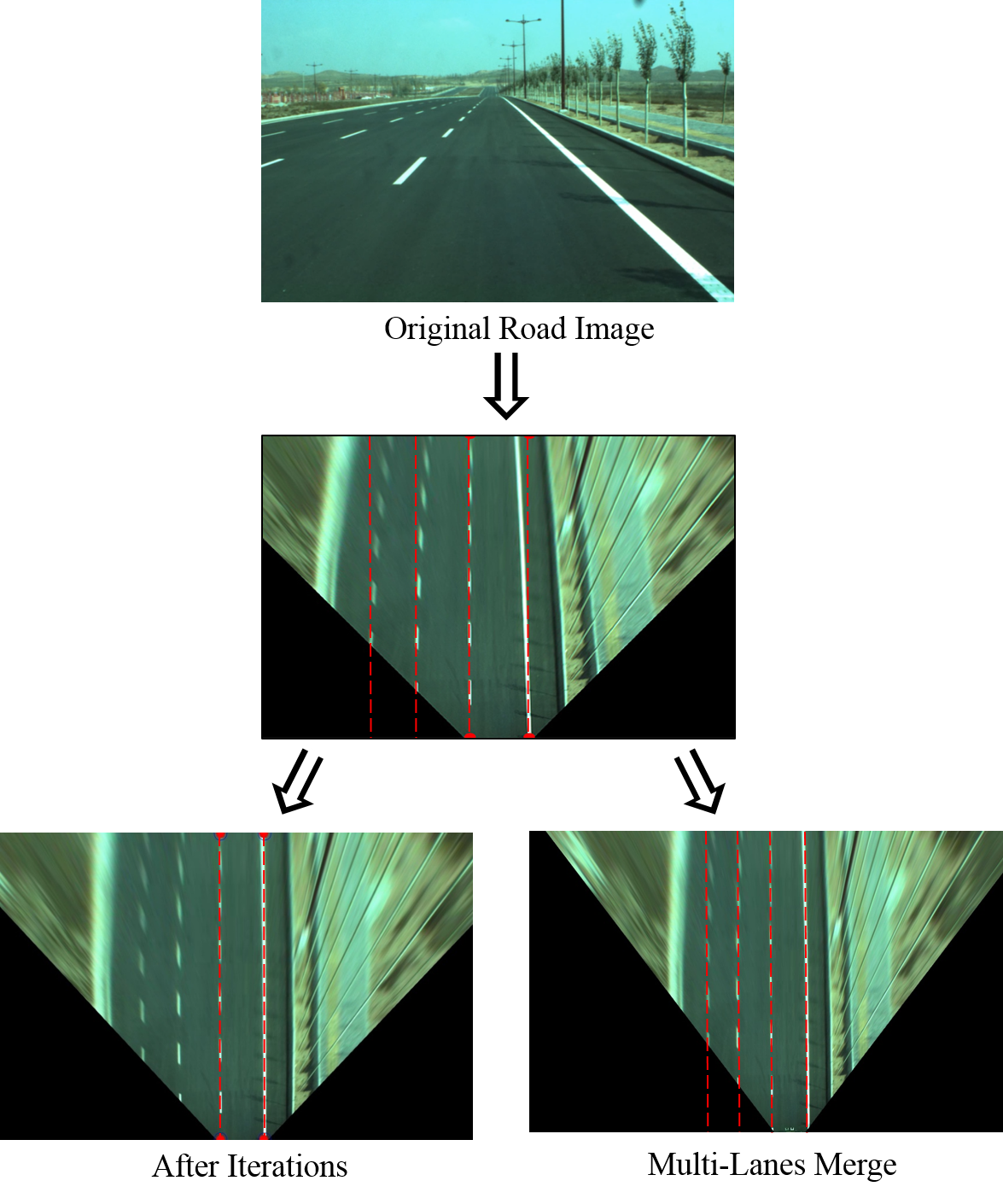

Inverse Perspective Mapping(IPM) based lane detection is widely employed in vehicle intelligence applications. Currently, most IPM method requires the camera to be calibrated in advance. Any shifts of camera will lead to the failure of the previous calibration. In this work, a calibration-free approach is proposed to iteratively attain an accurate inverse perspective transformation of the road, through which a “birdeye” view of the target road, as well as parallel lanes, can be gained. This method obtains 4 end-points of a pair of lanes in perspective image by our lane detection algorithm first. Based on the hypothesis that the road is flat, we project these points to the corresponding points in the IPM view in which the two lanes are parallel lines to get the initial transformation matrix. Then, we use an iteration strategy to increase IPM’s accuracy by minimizing the detection error between the lines in two views. After the iteration stops, the better transformation matrix is used for final IPM. Besides, to attain a better IPM view in which every lane approaches parallel, we propose a multi-lanes based IPM which involve more lanes. The results of several experiments are provided to demonstrate the effectiveness of the method.

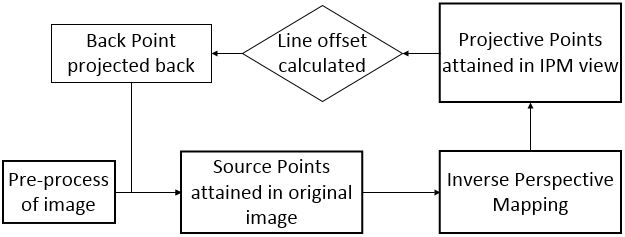

Algorithm Flow Chart

Algorithm Overview

Inverse Perspective Mapping

In computer vision, the mathematical relationship between two planes is defined as a homography matrix H. As explained in [1], the matrix H can be expressed as H = sMR. We can attain the transformation relationship between two planes by 8 corresponding points, 4 points in each plane. The image below illustrates the process.

Pre Process

Denoising based on Lane’s characteristic

The lanes in perspective view possess some different feature from other lines, such as the lanes’ angles are always within a certain range. Therefore, we need to set a threshold to filtrate all the lines, wiping off nearly-horizontal and nearly-perpendicular lines. Moreover, the noise lines are minimal and separated in different frame of the road record. It is also useful to filter out the lines which are minority based on their angles from the total lines gathered in a number of frames. The rest lines are the lanes we want.

Bisect-Kmeans to Distinguish Different Lanes

Real road condition is complex, we can hardly know how many lanes in the road, which will cause great trouble to our process. Therefore, it is necessary to cluster the lines in time. In this paper we put forward a fast method especially aimed at lane detection to get the number of the clusters. In real road image, the horizontal ordinate of points which lines cross the upper screen possess big difference from each other. Therefore, we can perform a fast bisect-Kmeans based on crossing points: divide all the lines in two part with Kmeans, and calculate each cluster’s variance also based on the the horizontal ordinate of the crossing points. If its variance is bigger than a certain threshold, keep Kmeans(K=2) it.

Iterative Method fo IPM

After the first-time IPM, we detect the IPM view to locate the lanes’ end points (we name them “project points”), which should be the real corresponding points of perspective view’s end points. Then transform the “project points” back into the perspective view as “back points”. However, there maybe some inaccuracy in our IPM view’s detection, thus we can not use the back points to replace the source points. Instead, we combine the back points with the previous source points to get new source points (The combining method can be various: predict-combine or weights-combine), then use these new points to start iteration. Therefore by iteration, more accurate IPM view can be attained.

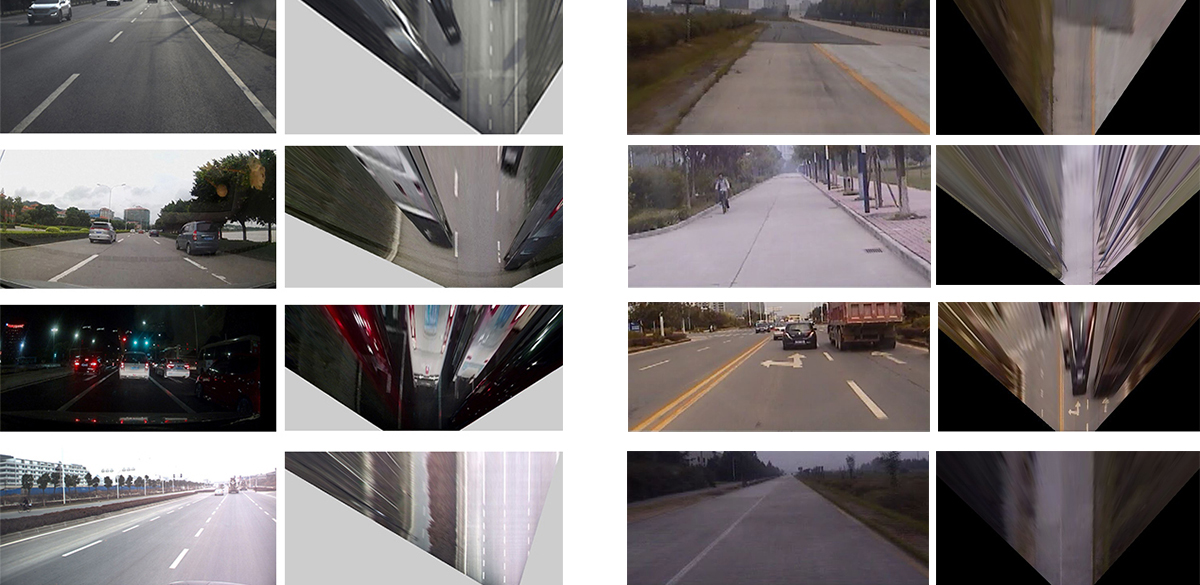

Experiment

Reference

[1] Bradski,G,”Learning OpenCV”,O’REILLY,2008